Projects

News: Trauma conference London 2024, slides. Newsletter TraumaNews, May 2023.

Old news: 2019 video presenting the project and the collaboration with Capgemini Invent; short slide presentation (2019). Media: Pharmaradio podcast, press review, BioWorldMedTech article.

For almost 8 years, I have been working with a group of intensive care clinicians who treat trauma patients, i.e. patients injured in road accidents, falls from trees, etc., who often suffer from head trauma and hemorrhagic shock. We are organized as a public/private consortium with the École des hautes études en sciences sociales, École polytechnique, Inria, CNRS, and Capgemini Invent (through a skills sponsorship). Over the years, our work has focused on improving data collection (rich data on patient care from the accident scene to hospital discharge), developing causal inference methods to evaluate the effectiveness of interventions or treatments, recommending therapeutic strategies (optimal treatment policy, transfusion dose, which treatment to give to whom and when, etc.), handling missing information, and building predictive models for decision-making and patient management in a highly uncertain environment with multiple stakeholders, where every minute counts.

We are starting a real-time evaluation of our models in collaboration with the SAMU (French ambulances will be equipped with an application running our predictive models) to quantify the improvement in patient care. The project raises many scientific challenges: are my predictive models robust to changes in practices and patient populations? Are my algorithms fair (do I predict equally well for men and women)? How do I convey the confidence of a prediction (our algorithm sometimes answers: I don't know!)? How should results be presented to doctors, and how will they react (what we are testing is human-machine interaction)? How do I integrate heterogeneous data from European counterparts? Transferring these tools to clinical practice requires the involvement of all stakeholders.

The Traumatrix project brings numerous benefits to academic research and motivates doctoral and postdoctoral researchers, who can quickly test innovations on real-world data and contribute to a societal project. In addition, moving from method development to real-time implementation is particularly exciting.

Research papers can be found here. Below are some papers associated with the project, as well as presentations given at the SFAR (French Society of Anesthesia & Intensive Care Medicine) on projects, papers and internships carried out with interns and École polytechnique students.

- Trauma reloaded: Trauma registry in the era of data science. Anaesthesia Critical Care & Pain Medicine.

- Doubly robust treatment effect estimation with incomplete confounders. Annals of Applied Statistics (AOAS).

- Causal inference methods for combining randomized trials and observational studies: a review.

- Slides: Prediction of haemorrhagic shock / Logistic regression with missing data (useful for propensity scores and IPW with missing values), joint work with W. Jiang, M. Lavielle, M. Pichon. Article.

- Effect of tranexamic acid on survival of head trauma patients. Slides: Heterogeneous treatment effect / causal inference. Poster. T. Alves De Sousa, JP Nadal, T. Gauss, JD Moyer, I. Mayer, S. Wager.

- Effect of Fibrinogen administration on early mortality in traumatic haemorrhagic shock: a propensity score analysis. Hamada, S., Beauchesne, J., et al.
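The propensity-score, IPW and doubly robust ideas behind several of these papers can be illustrated with a minimal sketch, assuming NumPy, scikit-learn and fully observed covariates (unlike the incomplete-confounder setting of the AOAS paper). The data are simulated and the helper names (`simulate`, `propensity`, `ipw_ate`, `aipw_ate`) are hypothetical; this is the textbook augmented IPW (AIPW) estimator, not the code used in the Traumatrix studies.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, LogisticRegression


def simulate(n=5000, seed=0):
    """Hypothetical confounded data: x1 drives both treatment and outcome."""
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(n, 2))
    p_treat = 1.0 / (1.0 + np.exp(-1.5 * X[:, 0]))
    T = rng.binomial(1, p_treat)
    # The true average treatment effect (ATE) is 2.0 by construction.
    Y = 1.0 + 2.0 * T + 2.0 * X[:, 0] + X[:, 1] + rng.normal(size=n)
    return X, T, Y


def propensity(X, T, clip=0.01):
    """Logistic-regression estimate of e(x) = P(T = 1 | X = x), clipped away from 0 and 1."""
    e = LogisticRegression().fit(X, T).predict_proba(X)[:, 1]
    return np.clip(e, clip, 1 - clip)


def ipw_ate(X, T, Y):
    """Normalized (Hajek) inverse propensity weighting estimate of the ATE."""
    e = propensity(X, T)
    w1, w0 = T / e, (1 - T) / (1 - e)
    return np.sum(w1 * Y) / np.sum(w1) - np.sum(w0 * Y) / np.sum(w0)


def aipw_ate(X, T, Y):
    """Doubly robust (AIPW) estimate: outcome models plus weighted residual corrections."""
    e = propensity(X, T)
    mu1 = LinearRegression().fit(X[T == 1], Y[T == 1]).predict(X)
    mu0 = LinearRegression().fit(X[T == 0], Y[T == 0]).predict(X)
    psi = mu1 - mu0 + T * (Y - mu1) / e - (1 - T) * (Y - mu0) / (1 - e)
    return psi.mean()


X, T, Y = simulate()
naive = Y[T == 1].mean() - Y[T == 0].mean()
print(f"naive {naive:.2f}  IPW {ipw_ate(X, T, Y):.2f}  AIPW {aipw_ate(X, T, Y):.2f}")
```

The naive difference in means is biased upwards because `x1` increases both the probability of treatment and the outcome; IPW and AIPW should both land near the true effect of 2. AIPW remains consistent if either the propensity model or the outcome model is correctly specified, which is what "doubly robust" refers to.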

Respiratory Diseases:

With colleagues from Inserm, I work on respiratory diseases such as asthma. These diseases result from gene-environment interactions, and the WHO estimates that by 2050 one in two people will suffer from respiratory diseases, allergies, asthma, etc., due to climate change, poor air quality, biodiversity loss, etc. This is a major public health issue. To improve prevention and establish new diagnostic and therapeutic practices, we use an exposomic approach: we study all the social and environmental factors that, combined with patient characteristics, help predict the onset and progression of these diseases. AI can accelerate this research by exploiting heterogeneous, multidimensional and complex data. This is all the more important as the populations included in randomised controlled trials represent only 10% of the patients who will receive the treatment (Pahus et al., 2019), so there is a real need for more evidence on how best to treat patients.

ICUBAM: ICU Bed Availability Monitoring

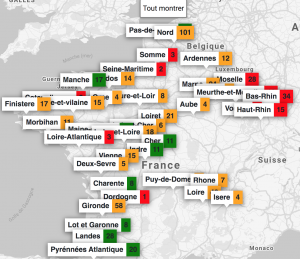

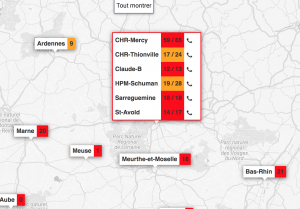

One of the major challenges during the health crisis was the availability of intensive care beds equipped with ventilators. To address this problem, together with colleagues from Inria and a team of computer scientists, clinicians and researchers, we created ICUBAM, an operational tool allowing intensivists to monitor and visualize bed availability in real time. Intensivists entered data on available beds, as well as data on deaths, admissions and transfers, directly from their mobile phone (in less than 10 seconds) and received an updated visualization on a map of France.

The project, described in an article, an interview and slides, grew out of a personal initiative of an intensivist (A. Kimmoun) from the Grand Est region, which was severely affected by the crisis.

In less than two weeks, all intensive care units in this region were overwhelmed and beds were created on the fly. Official information systems were unable to provide reliable, up-to-date information on bed availability, although this information was crucial for allocating patients and resources efficiently. Through my collaborations with a network of intensivists, I organized a meeting on March 22, 2020 with colleagues Gabriel Dulac Arnold, Olivier Teboule and A. Kimmoun, and we worked 7 days a week for several weeks on the project, building an interdisciplinary team (Laurent Bonnasse-Gahot EHESS, Maxime Dénès Inria, Sertan Girgin Google Research, François Husson CNRS, IRMAR, Valentin Iovene Inria, François Landes Université Paris-Saclay, Jean-Pierre Nadal CNRS & EHESS, Romain Primet Inria, Frederico Quintao Google Research, Pierre Guillaume Raverdy Inria, Vincent Rouvreau Inria, Roman Yurchak). ICUBAM was deployed on March 25 with the agreement of the ARS Grand Est (regional health agency). It was used in 40 départements by 130 intensive care units and covered more than 2,000 ICU beds.

The success of ICUBAM can be explained, on the one hand, by the quality of the data, collected directly at the bedside, which allowed a real-time inventory and modelling of the evolution of the epidemic to inform patients, health authorities and practitioners; and, on the other hand, by a team with complementary skills working closely with caregivers and institutions.

Distributed matrix completion for medical databases

This started as joint work with Geneviève Robin (CNRS Researcher), François Husson (Professor at Agrocampus Ouest), Balasubramanian Narasimhan (Senior Researcher at Stanford University) and Anqi Fu (PhD student at Stanford). Personalized medical care relies on comparing new patients' profiles with existing medical records in order to predict a patient's response to treatment or risk of disease from their individual characteristics, and to adapt medical decisions accordingly. The chances of finding profiles similar to a new patient's, and therefore of providing better treatment, increase with the number of individuals in the database. For this reason, pooling the information contained in the databases of several hospitals promises better care for every patient. However, there are technical and social barriers to aggregating medical data: the size of combined databases often makes computation and storage intractable, and institutions are usually reluctant to share their data because of privacy concerns and proprietary attitudes. Both obstacles can be overcome with distributed computation, which leaves the data on site and distributes the calculations, so that hospitals share only intermediate results instead of raw data. This could address the privacy problem and reduce computational cost by splitting one large problem into several smaller ones. The general project is described in Narasimhan et al. (2017).

As is often the case, the medical databases are incomplete. One aim of the project is to impute the data of one hospital using the data of the other hospitals, which could also encourage hospitals to participate in the project and share summaries of their data. This project continues with Claire Boyer, Aurélien Bellet and Marco Cuturi.
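A minimal sketch of the distributed idea, assuming NumPy and a plain (uncentered, unregularized) iterative SVD imputation rather than the method of Narasimhan et al. (2017): each hypothetical site keeps its rows and shares only column sums, counts and one p × p Gram matrix per iteration. Because the sum of the local Gram matrices equals the Gram matrix of the stacked data, this reproduces the centralized algorithm exactly. The function name and the simulated "hospitals" are illustrative, and sharing Gram matrices is not by itself a formal privacy guarantee.

```python
import numpy as np


def distributed_svd_impute(sites, rank=2, n_iter=200):
    """Iterative rank-`rank` SVD imputation where the rows stay on each site.

    `sites` is a list of (n_k, p) arrays, one per (hypothetical) hospital, with
    np.nan marking missing entries. Only column sums, counts and p x p Gram
    matrices are pooled; raw rows are never exchanged.
    """
    masks = [np.isnan(X) for X in sites]
    # Initial fill with global column means, computed from per-site summaries.
    col_sum = sum(np.nansum(X, axis=0) for X in sites)
    col_cnt = sum((~m).sum(axis=0) for m in masks)
    col_mean = col_sum / col_cnt
    filled = [np.where(m, col_mean, X) for X, m in zip(sites, masks)]
    for _ in range(n_iter):
        # Coordinator: the sum of local Gram matrices equals the Gram matrix of
        # the stacked data, so its top eigenvectors are the global right singular vectors.
        G = sum(F.T @ F for F in filled)
        _, eigvec = np.linalg.eigh(G)   # eigenvalues are returned in ascending order
        V = eigvec[:, -rank:]           # p x rank shared subspace
        # Each site: project its rows on the shared subspace, refill missing cells only.
        filled = [np.where(m, F @ V @ V.T, X) for F, X, m in zip(filled, sites, masks)]
    return filled


# Hypothetical example: a rank-2 matrix split across three "hospitals", 20% MCAR.
rng = np.random.default_rng(0)
full = rng.normal(size=(300, 2)) @ rng.normal(size=(2, 10))
mask = rng.random(full.shape) < 0.2
incomplete = np.where(mask, np.nan, full)
sites = np.split(incomplete, [100, 200])   # rows 0-99, 100-199, 200-299
imputed = np.vstack(distributed_svd_impute(sites, rank=2))
rmse = np.sqrt(np.mean((imputed[mask] - full[mask]) ** 2))
print("RMSE on missing entries:", round(rmse, 4))
```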

Missing Values: more resources on R-miss-tastic

The problem of missing values is ubiquitous in data analysis. The naive workaround of deleting observations with missing entries is not an option in high dimensions, as it can lead to discarding almost all the data and, unless the values are missing completely at random, to substantial bias. The methods available to handle missing values depend on the aim of the analysis, the pattern of missing values and the mechanism that generates them. Rubin (1976) defined a widely used nomenclature for missing-data mechanisms: missing completely at random (MCAR), where missingness is independent of the data; missing at random (MAR), where the probability of being missing depends only on observed values; and missing not at random (MNAR), where the probability of missingness depends on the unobserved values. A large part of the literature focuses on MCAR and MAR.
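The three mechanisms can be simulated in a few lines. This NumPy sketch (variable names and missingness probabilities are illustrative assumptions) masks a variable `y` under MCAR, MAR and MNAR and compares the complete-case mean with the true mean of 0; it also computes the share of fully observed rows left by listwise deletion in dimension 100.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 10_000
x = rng.normal(size=n)
y = 0.8 * x + 0.6 * rng.normal(size=n)   # E[y] = 0, Var[y] = 1, corr(x, y) = 0.8


def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))


# Boolean masks: True means y is missing (x is always observed).
masks = {
    "MCAR": rng.random(n) < 0.3,             # unrelated to any value
    "MAR": rng.random(n) < sigmoid(2 * x),   # depends only on the observed x
    "MNAR": rng.random(n) < sigmoid(2 * y),  # depends on y itself
}
# Complete-case estimate of E[y] under each mechanism.
cc_means = {name: y[~m].mean() for name, m in masks.items()}
print({name: round(float(v), 2) for name, v in cc_means.items()})

# Listwise deletion in high dimension: with p = 100 variables, each missing
# independently with probability 5%, the share of fully observed rows is tiny.
p, rate = 100, 0.05
frac_complete = (1 - rate) ** p   # about 0.006, i.e. 0.6% of the rows survive
```

Under MCAR the complete-case mean stays close to 0, whereas under MAR and MNAR it is pulled downwards because large values are more often missing. Under MAR the bias can be corrected using the observed `x`; under MNAR this requires additional assumptions on the missingness mechanism.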

Contributions on MNAR data (identifiability and estimation):

- Aude Sportisse, Claire Boyer, Julie Josse. Estimation and Imputation in Probabilistic Principal Component Analysis with Missing Not At Random Data. NeurIPS 2020.

- Aude Sportisse, Claire Boyer, Julie Josse. Imputation and low-rank estimation with Missing Not At Random data. Statistics & Computing, 2019-2020.

Many statistical methods have been developed to handle missing values (Little and Rubin, 2019; van Buuren, 2018) in an inferential framework, i.e. when the aim is to estimate parameters and their variance from incomplete data. One popular approach is imputation, which consists of replacing the missing values with plausible values to obtain a completed dataset that can be analyzed with any method. Mean imputation is one of the worst options in an inferential framework, as it distorts the joint and marginal distributions (it shrinks variances and attenuates correlations). One can impute either according to a joint model or with a fully conditional modelling approach. Powerful methods include imputation by random forests (missForest package), imputation by low-rank methods (missMDA and softImpute packages) and, more recently, imputation using optimal transport.
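To see why mean imputation distorts distributions, the following NumPy sketch (simulated data, illustrative parameters) imputes 40% MCAR values of `y` by the observed mean: the mean is preserved, but the variance shrinks to roughly 60% of its value and the correlation with `x` drops from about 0.8 to about 0.6.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 10_000
x = rng.normal(size=n)
y = 0.8 * x + 0.6 * rng.normal(size=n)      # Var[y] = 1, corr(x, y) = 0.8
miss = rng.random(n) < 0.4                   # 40% of y missing completely at random
y_imp = np.where(miss, y[~miss].mean(), y)   # mean imputation

print("variance:   ", round(y.var(), 2), "->", round(y_imp.var(), 2))
print("correlation:", round(np.corrcoef(x, y)[0, 1], 2), "->",
      round(np.corrcoef(x, y_imp)[0, 1], 2))
```

Every imputed value sits exactly at the centre of the distribution, so spread and co-variation are underestimated, and any standard error computed on the completed data is too small.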

Contributions on imputation methods (SVD-based methods, optimal transport):

- Muzellec, B., Josse, J., Boyer, C. & Cuturi, M. (2020). Missing Data Imputation using Optimal Transport. ICML 2020.

- Pavlo Mozharovskyi, Julie Josse, François Husson (2020). Nonparametric imputation by data depth. Journal of the American Statistical Association, 115(529), 241-253.

- Husson, F., Josse, J., Narasimhan, B. & Robin, G. (2019). Imputation of mixed data with multilevel singular value decomposition. Journal of Computational and Graphical Statistics, 28(3).

- Audigier, V., Husson, F. & Josse, J. (2016). A principal components method to impute missing values for mixed data. Advances in Data Analysis and Classification, 10(1), 5-26.
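The low-rank (SVD-based) imputation idea can be sketched as follows, assuming NumPy only: start from mean imputation, fit a rank-k PCA (centered SVD), replace the missing cells by the fitted values, and iterate until the imputed values stop changing. This is the plain, unregularized iterative PCA algorithm; missMDA's `imputePCA` uses a regularized version by default and softImpute soft-thresholds the singular values, and neither is reproduced here. The function name and simulated data are hypothetical.

```python
import numpy as np


def iterative_svd_impute(X, rank=2, max_iter=1000, tol=1e-10):
    """Impute np.nan entries of X with a rank-`rank` PCA fit, iterating to convergence."""
    mask = np.isnan(X)
    Z = np.where(mask, np.nanmean(X, axis=0), X)   # start from mean imputation
    for _ in range(max_iter):
        mu = Z.mean(axis=0)                        # re-estimate column means
        U, s, Vt = np.linalg.svd(Z - mu, full_matrices=False)
        fit = mu + (U[:, :rank] * s[:rank]) @ Vt[:rank]
        Z_new = np.where(mask, fit, X)             # only missing cells change
        change = np.sum((Z_new - Z) ** 2) / np.sum(Z ** 2)
        Z = Z_new
        if change < tol:
            break
    return Z


# Hypothetical example: rank-2 signal with column offsets and small noise, 20% MCAR.
rng = np.random.default_rng(1)
signal = 5.0 + rng.normal(size=(200, 2)) @ rng.normal(size=(2, 8))
full = signal + 0.1 * rng.normal(size=signal.shape)
mask = rng.random(full.shape) < 0.2
X = np.where(mask, np.nan, full)
Z = iterative_svd_impute(X, rank=2)
rmse_svd = np.sqrt(np.mean((Z[mask] - full[mask]) ** 2))
rmse_mean = np.sqrt(np.mean((np.where(mask, np.nanmean(X, axis=0), X)[mask] - full[mask]) ** 2))
print("RMSE mean imputation:", round(rmse_mean, 3), " RMSE iterative SVD:", round(rmse_svd, 3))
```

The number of components `rank` plays the role of `ncp` in missMDA and would in practice be chosen by cross-validation rather than fixed in advance.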

A single imputation can be of interest in itself if the aim is to predict the missing values as accurately as possible (matrix completion). Nevertheless, even if we manage to impute while preserving the joint and marginal distributions of the data as well as possible, a single imputation cannot reflect the uncertainty associated with the prediction of missing values. To achieve this goal, multiple imputation (MI) (van Buuren, 2018; mice package) generates several plausible values for each missing value (reflecting the variance of prediction given the observed data and the imputation model), leading to several imputed datasets. The analysis is then performed on each imputed dataset and the results are combined (with Rubin's rules) so that the final variance takes into account the additional variability due to missing values.
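The combination step can be made concrete with Rubin's rules: for M completed-data estimates with variances, the pooled estimate is their average and the total variance is T = W + (1 + 1/M) B, where W is the mean within-imputation variance and B the between-imputation variance. The NumPy sketch below implements these rules and, as a hypothetical imputation model, uses bootstrap plus stochastic linear regression to estimate the mean of a MAR variable; it is not the mice implementation, and all names are illustrative.

```python
import numpy as np


def rubin_pool(estimates, variances):
    """Combine M completed-data analyses with Rubin's rules."""
    q = np.asarray(estimates, dtype=float)
    u = np.asarray(variances, dtype=float)
    M = len(q)
    q_bar = q.mean()              # pooled point estimate
    W = u.mean()                  # within-imputation variance
    B = q.var(ddof=1)             # between-imputation variance
    T = W + (1 + 1 / M) * B       # total variance
    return q_bar, T


def mi_mean(x, y, M=20, seed=0):
    """Multiple imputation of y (np.nan = missing) from a fully observed x,
    then a pooled estimate of E[y] and its total variance."""
    rng = np.random.default_rng(seed)
    miss = np.isnan(y)
    obs = np.flatnonzero(~miss)
    estimates, variances = [], []
    for _ in range(M):
        # Bootstrap the observed rows to reflect uncertainty on the regression parameters.
        idx = rng.choice(obs, size=obs.size, replace=True)
        slope, intercept = np.polyfit(x[idx], y[idx], 1)
        sd = np.std(y[idx] - (intercept + slope * x[idx]), ddof=2)
        y_m = y.copy()
        # Add residual noise so that imputed values reflect the prediction variance.
        y_m[miss] = intercept + slope * x[miss] + rng.normal(0.0, sd, miss.sum())
        estimates.append(y_m.mean())
        variances.append(y_m.var(ddof=1) / y_m.size)
    return rubin_pool(estimates, variances)


# Hypothetical MAR example: y is more often missing when x is large; true E[y] = 0.
rng = np.random.default_rng(1)
n = 2000
x = rng.normal(size=n)
y = 0.8 * x + 0.6 * rng.normal(size=n)
y[rng.random(n) < 1 / (1 + np.exp(-2 * x))] = np.nan
estimate, total_var = mi_mean(x, y)
print(f"pooled mean {estimate:.3f}, standard error {np.sqrt(total_var):.3f}")
```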

Contributions on multiple imputation methods (based on low-rank methods):

- Audigier, V., Husson, F. & Josse, J. (2017). MIMCA: Multiple imputation for categorical variables with multiple correspondence analysis. Statistics and Computing, 27(2), 501-518.

- Audigier, V., Husson, F. and Josse, J. (2015). Multiple Imputation with Bayesian PCA. Journal of Statistical Computation and Simulation.

- Josse, J., Husson, F. and Pagès, J. (2011). Multiple imputation in PCA. Advances in data analysis and classification.

An alternative way to handle missing values is to modify the estimation procedure so that it can be applied directly to incomplete data. For example, one can use the EM algorithm to obtain the maximum likelihood estimate (MLE) despite missing values. This is implemented, for instance, for linear and logistic regression in the R package misaem.
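As an illustration of EM with missing values, the sketch below estimates the mean and covariance of a multivariate Gaussian from incomplete data, assuming NumPy and a MAR mechanism. The E-step replaces each missing block by its conditional expectation given the observed entries and accumulates the conditional covariance; the M-step recomputes the mean and covariance. This is the classic Gaussian EM, not the stochastic approximation (SAEM) variant that misaem relies on for logistic regression, and the function name is hypothetical.

```python
import numpy as np


def em_gaussian(X, n_iter=200, tol=1e-8):
    """EM estimates of the mean and covariance of a multivariate normal
    sample X (n x p) whose missing entries are np.nan (MCAR or MAR assumed)."""
    n, p = X.shape
    mask = np.isnan(X)
    mu = np.nanmean(X, axis=0)
    sigma = np.diag(np.nanvar(X, axis=0))
    for _ in range(n_iter):
        X_hat = np.where(mask, 0.0, X)
        C = np.zeros((p, p))   # sum of conditional covariances of the missing blocks
        for i in range(n):
            m = mask[i]
            if not m.any():
                continue
            o = ~m
            S_mm = sigma[np.ix_(m, m)]
            if o.any():
                S_oo = sigma[np.ix_(o, o)]
                S_om = sigma[np.ix_(o, m)]
                coef = np.linalg.solve(S_oo, S_om).T   # S_mo S_oo^{-1}
                # E-step: conditional mean and covariance of missing given observed.
                X_hat[i, m] = mu[m] + coef @ (X[i, o] - mu[o])
                C[np.ix_(m, m)] += S_mm - coef @ S_om
            else:
                X_hat[i, m] = mu[m]
                C[np.ix_(m, m)] += S_mm
        # M-step: update mean and covariance from the expected sufficient statistics.
        mu_new = X_hat.mean(axis=0)
        D = X_hat - mu_new
        sigma_new = (D.T @ D + C) / n
        converged = (np.max(np.abs(mu_new - mu)) < tol
                     and np.max(np.abs(sigma_new - sigma)) < tol)
        mu, sigma = mu_new, sigma_new
        if converged:
            break
    return mu, sigma


# Hypothetical MAR example: true mean (0, 0), covariance [[1, 0.8], [0.8, 1]].
rng = np.random.default_rng(2)
n = 1000
x = rng.normal(size=n)
y = 0.8 * x + 0.6 * rng.normal(size=n)
y[rng.random(n) < 1 / (1 + np.exp(-2 * x))] = np.nan   # y missing more often when x is large
data = np.column_stack([x, y])
mu_hat, sigma_hat = em_gaussian(data)
print("complete-case mean of y:", round(np.nanmean(y), 3), " EM mean:", mu_hat.round(3))
```

The complete-case mean of `y` is biased downwards, while the EM estimate uses the relationship with the fully observed `x` to recover a value close to 0.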

Contributions on methods for inference with missing values (logistic regression, variable selection):

- M. Bogdan, W. Jiang, J. Josse, B. Miasojedow and V. Rockova. (2021). Adaptive Bayesian SLOPE: High dimensional Model Selection with Missing Values. In revision at JCGS.

- Jiang, W., Lavielle, M., Josse, J. & Gauss, T. (2019). Logistic Regression with Missing Covariates: Parameter Estimation, Model Selection and Prediction within a Joint-Modeling Framework. CSDA.

Contributions on exploratory data analysis with missing values, combining estimation and imputation (PCA with missing values):

- How to perform a PCA with missing values? How to impute data with a PCA? François Husson's YouTube playlist.

- Josse, J. and Husson, F. (2015). missMDA: a package to handle missing values in and with multivariate data analysis methods. Journal of Statistical Software.

- Josse, J. and Husson, F. (2013). Handling missing values in exploratory multivariate data analysis methods.

- Husson, F. and Josse, J. (2011). Handling missing values in Multiple Factor Analysis. Food Quality and Preference.

Finally, for supervised learning with missing values, where the aim is to predict an outcome as well as possible rather than to estimate parameters as accurately as possible, the solutions are very different. In our group, we have proposed new approaches to tackle this issue. For instance, we showed that imputing both the training and the test sets with the means of the variables computed on the training set, although inappropriate for estimation, is consistent for prediction when followed by a sufficiently flexible (universally consistent) learner. We have also studied how to handle missing values in random forests with the missing incorporated in attributes (MIA) criterion, implemented in scikit-learn's HistGradientBoosting estimators and in the R grf package. Finally, we have developed new, theoretically justified neural network methods for data with missing entries.

Contributions on supervised learning with missing values (SGD, random forests, neural nets):

- Le Morvan, M., Josse, J., Scornet, E. & Varoquaux, G. What's a good imputation to predict with missing values? NeurIPS 2021 (Spotlight).

- Sportisse, A., Boyer, C., Dieuleveut, A. & Josse, J. Debiasing Stochastic Gradient Descent to handle missing values. NeurIPS 2020.

- Le Morvan, M., Josse, J., Moreau, T., Scornet, E. & Varoquaux, G. NeuMiss networks: differentiable programming for supervised learning with missing values. NeurIPS 2020 (Oral).

- Le Morvan, M., Prost, N., Josse, J., Scornet, E. & Varoquaux, G. Linear predictor on linearly-generated data with missing values: non consistency and solutions. AISTATS 2020.

- Josse, J., Prost, N., Scornet, E. & Varoquaux, G. On the consistency of supervised learning with missing values.